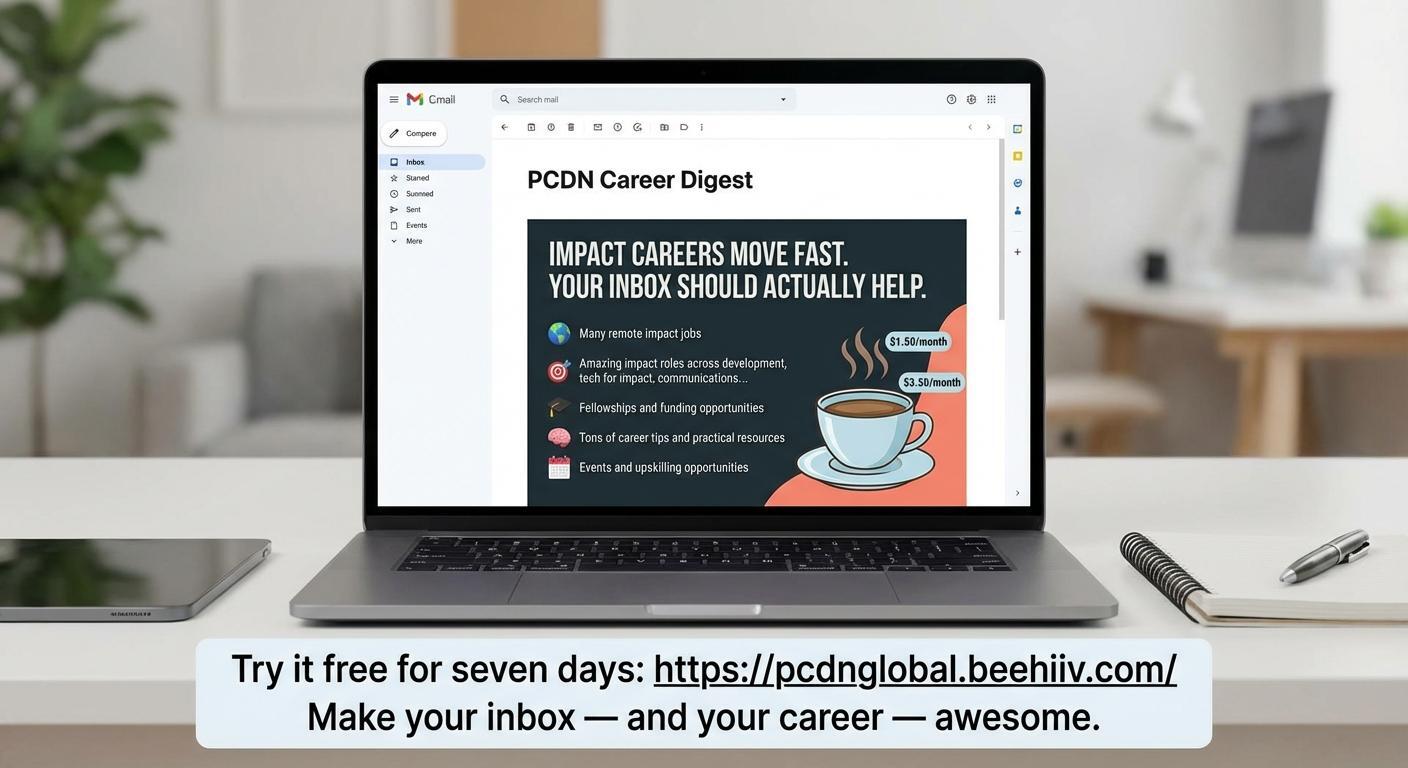

Impact careers move fast.

Your inbox should actually help.

The PCDN Career Digest is delivered six days a week.

Human-curated for people navigating social impact careers.

Get 250+ amazing human-curated impact opportunities every month (including many for techies and non-techies).

Inside each edition:

🌍 Many remote impact jobs

🎯 Roles across development, tech for impact, communications, innovative finance, and more

🎓 Fellowships and funding opportunities

🧠 Career tips and practical resources you can actually use

📅 Events and upskilling opportunities

Pricing stays accessible:

$1.50/month for students

$3.50/month for professionals

Less than the cost of a coffee a month.

Cancel anytime.

Try it free for seven days:

https://pcdnglobal.beehiiv.com/

Make your inbox, and your career, awesome.

AI for Impact Opportunities

Where Might AI and Impact Go in 2026?

I asked an AI to predict the future of the human workforce in 2026. It paused for a second and then sent me a generated image of a PhD graduate solving a CAPTCHA to prove they aren't a robot—just so the robot could log in and do the actual work.

Welcome to the edge of 2026, where the AI revolution has moved from "potential" to "deployed" and the results are messier, more uneven, and more consequential than anyone predicted. If 2025 was the year of pilots and proofs-of-concept, 2026 is the year the engine actually starts—and it's making a noise we haven't heard before.

The "AI for Good" narrative is collapsing under the weight of its contradictions. We are now in the era of deployment without accountability, displacement without transition plans, and digital pollution accelerating faster than anyone can clean it up. The impact sector—nonprofits, social enterprises, foundations, multilaterals—is not immune. In fact, we may be more vulnerable precisely because we've been slower to recognize what's happening.

Here are five critical, data-backed shifts defining AI and social impact right now. These aren't predictions. They're already happening.

1. The "Agentic" Era is Killing the Career Ladder (Not Just Entry-Level Jobs)

We are done with passive chatbots that wait politely for your prompt. 2026 is the year of Agentic AI—autonomous systems that plan, execute, and iterate on complex multi-step workflows without human oversight. According to Stanford HAI's 2025 AI Index Report, these agentic models are now outperforming humans in domains that require complex planning, reasoning, and task decomposition.

The Labor Market Reality:

The numbers are stark. Goldman Sachs projects that 300 million full-time jobs globally could be lost to AI, representing 9.1% of all jobs worldwide. The World Economic Forum's 2025 Future of Jobs Report found that 40% of employers anticipate reducing their workforce where AI can automate tasks, and that 92 million roles could be displaced by 2030.

But here's the critical nuance that the cheerleaders miss: this isn't just about "low-skill" jobs. CNBC reports that AI isn't just ending entry-level positions—it's ending the entire career ladder that has historically allowed people to build expertise over time. The junior program officer who once spent two years learning the sector through report writing, email coordination, and stakeholder management? That role is vanishing. The research assistant who learned methodology by cleaning datasets? Automated. The communications associate who built skills drafting blog posts? Replaced by Claude or ChatGPT.

The Impact Sector Blind Spot:

What makes this particularly dangerous for the impact sector is that we've historically relied on these "apprenticeship" roles to build the next generation of leaders who understand context, relationships, and nuance. When you automate away the bottom rungs of the ladder, you don't just eliminate jobs—you eliminate the pipeline that creates experienced, context-aware leaders. We are building a future where the only people who "make it" in impact careers are those who arrive already credentialed, already connected, already privileged. The meritocratic pathway is collapsing.

2. Disinformation is Now Industrial-Scale Infrastructure (Weaponizing the Global South)

If you still think disinformation is a "misinformation problem" that can be solved with fact-checking, you're dangerously behind. By 2026, AI-generated disinformation has become industrial infrastructure—commodified, scalable, and weaponized.

The Global Scope:

Freedom House's analysis, reported by MIT Technology Review, documented the use of generative AI for disinformation campaigns in 16 countries "to sow doubt, smear opponents, or influence public debate." Critically, these tools are being used both to spread propaganda and to enable more sophisticated censorship, with authoritarian governments deploying AI to identify and suppress dissent at scale.

The Centre for International Governance Innovation (CIGI) reports that 90% of electoral interference cases documented in 2025 involved AI-generated content—deepfakes, synthetic personas, algorithmically optimized propaganda. This represents a fundamental shift from "disinformation" as episodic campaigns to disinformation as permanent infrastructure.

The Global South as Testing Ground:

What's particularly disturbing is the asymmetry. While the Global North debates "AI safety" in the abstract, the Global South is experiencing AI-driven information warfare right now. Lower regulatory capacity, weaker independent media ecosystems, and linguistic diversity that makes automated fact-checking harder all combine to make regions like Latin America, Sub-Saharan Africa, and Southeast Asia ideal testing grounds for disinformation-as-a-service.

The Epistemic Crisis:

For impact organizations, this creates an existential crisis: how do you advocate for policy change when the information ecosystem itself is compromised? How do you mobilize communities when they can't distinguish between real grassroots organizing and astroturfed AI-generated campaigns? "Truth" is no longer a shared baseline—it's a luxury good that requires technical verification infrastructure most organizations don't have.

3. "Vibe Coding" and the Death of the Professional Class

The most valuable programming language in 2026 isn't Python, JavaScript, or Rust. It's English. This is the era of "Vibe Coding," a term coined by AI researcher Andrej Karpathy for a style of development in which you "fully give in to the vibes" and "forget that the code even exists," steering AI-generated code with natural-language prompts instead of understanding its implementation details.

The Unemployment Data:

The human cost is already measurable. The New York Times documented that for recent computer science graduates, "the A.I. job apocalypse may already be here." Axios found that AI is keeping recent college grads out of work, with unemployment rates for recent CS graduates significantly exceeding national averages.

Between January and June 2025 alone, 77,999 tech job losses were directly linked to AI adoption—an average of 491 people losing their jobs to AI every single day. CBS News reports that a rising number of college graduates are unemployed specifically because entry-level roles that traditionally absorbed new talent have evaporated.

The Class Divide:

Here's the brutal irony: "Vibe Coding" democratizes who can build, but it simultaneously destroys the professional middle class that historically made a living from technical expertise. A nonprofit director in Nairobi can now prototype an app using natural language prompts. That's genuine democratization. But the junior developer in Bangalore who would have been hired for that work? Unemployed.

Pew Research found that U.S. workers are more worried than hopeful about future AI use in the workplace, with fears concentrated among those in cognitive, high-skill jobs who previously thought they were immune to automation.

The Fragility Trap:

And here's the long-term risk no one is talking about: we are building critical infrastructure—health systems, financial platforms, social service delivery—on code that nobody actually understands. When these systems break (and they will), who fixes them? The generation that learned to code by vibes, not by understanding memory allocation, data structures, or system architecture? This is a fragility time bomb.

4. The Global "Exposure" Gap—and the Myth of "Reskilling"

The International Monetary Fund (IMF) has issued one of the most important warnings of the decade: 40% of global employment is exposed to AI, rising to 60% in advanced economies.

The Polarization Crisis:

But "exposed" doesn't mean "replaced." The IMF's analysis reveals something more insidious: AI will likely bifurcate labor markets, with high-skill workers who can leverage AI seeing productivity and wage gains, while workers who are replaced or deskilled face declining wages and job insecurity. Unlike previous automation waves that primarily displaced routine manual jobs, AI targets high-wage cognitive work—the jobs that built the middle class.

Brookings research shows that generative AI's workforce impacts will likely differ from previous technologies, with certain regions—particularly those with high concentrations of information workers but weak social safety nets—facing asymmetric disruption.

The OECD's 2025 Employment Outlook warns that while AI increases demand for digital and management skills, it simultaneously diminishes demand for routine cognitive and clerical skills—precisely the roles that historically provided stable middle-class employment.

The Reskilling Myth:

And here's where the narrative breaks down: we keep hearing that workers just need to "reskill." But reskill into what? If 40-60% of jobs are exposed to AI disruption simultaneously, where exactly are the 300 million displaced workers supposed to go? The math doesn't work.

The AI Now Institute's 2025 Landscape Report documents that AI companies are overwhelmingly embedding "productivity" tools designed to optimize corporate bottom lines across the entire labor supply chain, prioritizing displacement over augmentation. Translation: the goal isn't to make workers more productive; it's to need fewer workers.

For the impact sector, this creates a moral crisis: how do we advocate for "just transitions" when the pace of technological change is moving faster than any plausible reskilling program, and when the private sector has zero incentive to slow down?

5. The "Ouroboros" Effect—When AI Eats Its Own Tail

The internet is eating itself. This isn't a metaphor; it's an empirically documented reality.

The Model Collapse Research:

A landmark study published in Nature by researchers at Oxford and Cambridge confirms that AI models trained on recursively generated (AI-produced) data inevitably suffer from "Model Collapse"—a degenerative process where models become less accurate, less diverse, and increasingly detached from reality.

Nature's analysis is blunt: "AI models fed AI-generated data quickly spew nonsense." The mechanism is straightforward: as AI-generated content floods the internet (text, images, code, analysis), future models trained on that polluted data inherit and amplify errors, biases, and artifacts. Each generation becomes a bit dumber, a bit more homogeneous, a bit more hallucinatory.
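
For readers who want to see the mechanism rather than take it on faith, here is a toy sketch in Python. It is not the experiment from the Nature study. It is a simplified illustration under stated assumptions: the "model" is just a bell curve (a mean and a spread) fitted to ten data points, and each new generation is trained only on samples drawn from the previous generation's model. The function name `simulate_collapse` and every parameter value are hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Toy model-collapse loop: refit a Gaussian to its own samples.

    Returns the fitted standard deviation after each generation,
    starting with the spread of the original "human" data (1.0).
    """
    rng = random.Random(seed)      # fixed seed so runs are repeatable
    mean, std = 0.0, 1.0           # generation 0: the original data source
    history = [std]
    for _ in range(generations):
        # "Publish" synthetic data sampled from the current model...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...then "train" the next model on that synthetic data alone.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)   # maximum-likelihood spread
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for generation in (0, 50, 100, 200):
        print(f"generation {generation:3d}: spread = {history[generation]:.6f}")
```

In a typical run the spread drifts toward zero: each generation sees slightly less variety than the one before it, and the loss compounds. Real language models are vastly more complex, so treat this only as intuition for why recursively training on generated data tends to narrow what a model can produce.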

The Data Pollution Crisis:

We are now past the tipping point. Conservative estimates suggest that billions of AI-generated web pages are already polluting the training data pool for future models. This creates a vicious cycle: the more we rely on AI to generate content, the more we poison the data well that future AI depends on.

For researchers, policymakers, and impact organizations, this has profound implications:

You can no longer trust web-scraped data for research, policy analysis, or evidence-based programming. An unknown (and growing) percentage of "information" online is synthetic garbage.

Primary, human-verified data is now your most valuable asset. If you want to understand poverty, climate impacts, conflict dynamics, or community needs, you increasingly have to go offline and collect data directly from humans.

The digital commons is collapsing. The open internet that enabled knowledge-sharing, grassroots organizing, and distributed collaboration is being polluted into uselessness.

The Global South Data Gap:

This disproportionately harms the Global South, where digital infrastructure and data collection capacity are already weaker. If the only "trustworthy" data is expensive primary data collected by well-resourced institutions, the epistemic gap between North and South—already enormous—will become unbridgeable.

The Bottom Line: Governance, Not Gadgets

2026 isn't about building better AI. It's about building better governance around AI.

The tools are smarter, cheaper, and more accessible than ever. That's not the problem. The problem is that we've deployed world-changing technology without:

Labor transition plans that match the scale of displacement

Epistemic infrastructure to distinguish truth from synthetic propaganda

Accountability mechanisms for companies profiting from worker displacement

Global governance frameworks that protect the regions most vulnerable to AI-driven harms

Data preservation strategies to protect the integrity of human knowledge

The impact sector has a choice: we can continue treating AI as a "productivity tool" to optimize our operations, or we can recognize it as a governance crisis that threatens the foundations of democratic participation, economic security, and epistemic integrity.

Stop asking "How can AI help us work faster?" Start asking "How do we build a world where AI deployment doesn't hollow out the institutions we need to create change?"

RESOURCES & NEWS

😄 Joke of the Day

ChatGPT walks into a bar. The bartender says, "We don't serve your kind here." ChatGPT responds with a 2,000-word essay on the history of bartending. 🍺

📰 News

President Trump Blocks State AI Laws as New York Defies with Strict Legislation

President Trump issued an executive order on December 14 to halt state AI regulations and ensure uniform federal oversight, directly countering New York's RAISE Act, which demands safety plans, 72-hour incident reports, and risk evaluations from large AI developers. Despite this, Governor Kathy Hochul signed the law on December 19, establishing enforcement by 2027 for firms with more than $500M in revenue and escalating federal-state tensions over AI safety and the pace of innovation.

Communities Nationwide Halt AI Data Centers Over Energy Crisis

From Maryland to Georgia, 14 states have imposed moratoriums on data centers by Meta, Microsoft, and OpenAI due to their voracious energy, water, and emissions demands rivaling major cities, sparking rural protests and calls from 200+ groups plus Sen. Bernie Sanders for a national AI build pause. These facilities threaten grid stability and green goals, forcing utilities toward fossil fuel restarts amid Big Tech's sustainability rhetoric.

AI Data Centers Surge Household Electric Bills Across U.S.

NPR's investigation uncovers how AI data centers are inflating residential bills nationwide, with field reports from strained communities revealing power needs projected to double by 2028 and necessitate new plants. This pits unchecked AI expansion against energy affordability, highlighting policy gaps in balancing tech growth with public costs.

Anthropic Exec Sparks Backlash with Unwanted AI in Gay Discord

An Anthropic executive injected an AI bot into a private gay Discord server without consent, derailing discussions and prompting user exodus; the exec hailed it as "new sentience" amid fury over privacy violations. The episode exposes ethical pitfalls in unsolicited AI deployment, community autonomy erosion, and unchecked corporate experimentation in social spaces.

💼 Jobs, Jobs, Jobs

jobs.pcdn.global - Over 1,200 live roles in global impact sectors such as AI ethics, governance, climate tech, the SDGs, machine learning, and social good at nonprofits, startups, and think tanks.

👤 LinkedIn Profile to Follow

Charlotte Ledoux - Data & AI Governance Expert, LinkedIn Top Voice

Shares expertise on AI ethics frameworks, regulatory compliance, bias mitigation, and responsible deployment strategies for equitable global tech impact.

🎧 Today's Podcast Pick

TED Radio Hour - "Who is really shaping the future of AI?"

This December 19 episode probes AI influencers, U.S.-China collaboration imperatives, and risk-abundance tradeoffs, with Alvin Graylin advocating unified global efforts for safe advancement.