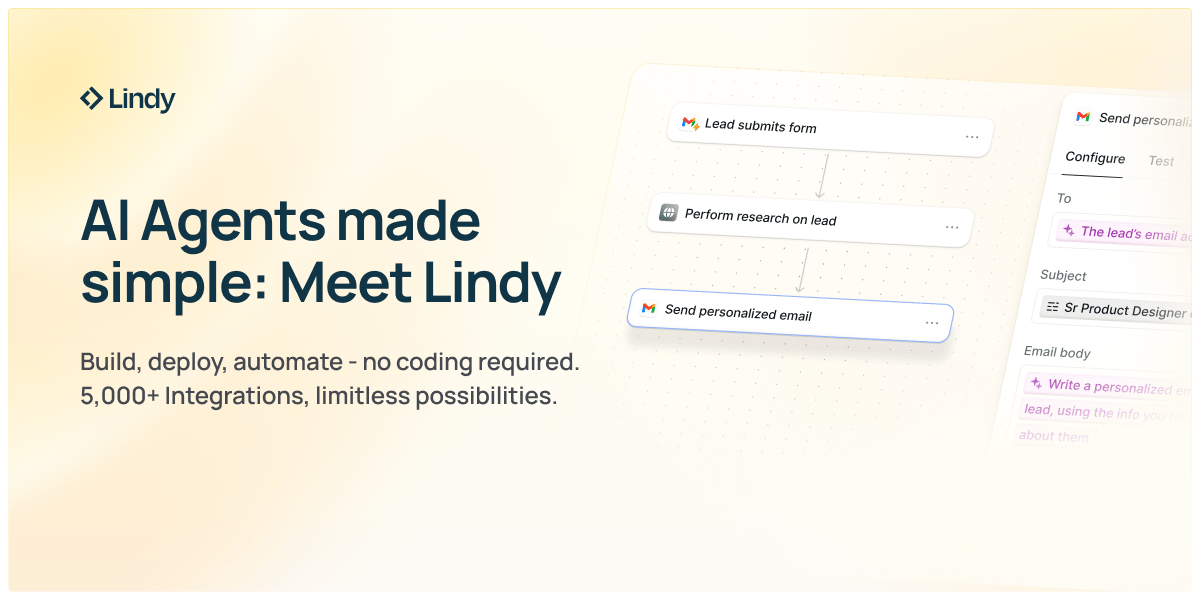

The Simplest Way To Create and Launch AI Agents

Imagine if ChatGPT and Zapier had a baby. It’d be ridiculously smart… and probably named Lindy.

With Lindy, you can spin up AI agents in minutes to handle all the stuff you’d rather not—like lead qual, cold outreach, web scraping, and other “ugh” tasks. It’s like hiring a whole team that never sleeps, never complains, and never asks for PTO.

Lindy’s agents are ready to take on support tickets, data entry, lead enrichment, scheduling, and more. All so you can get back to the fun part: building your business.

Ready to hand off the busy work? Build your first AI agent today and join thousands of businesses already saving time (and sanity) with automation that actually works.

Ensuring AI Works for Humanity

The rapid advancement of artificial intelligence presents humanity with both unprecedented opportunities and significant challenges. As AI systems become increasingly sophisticated, the critical question isn't whether powerful AI can be built, but whether it will serve humanity's best interests. The window for establishing robust governance frameworks and safety measures is narrowing, making responsible AI development an urgent priority.

Key Questions Defining Our Path Forward

The AI community and policymakers must grapple with fundamental questions that will shape our technological trajectory. How can meaningful human control be maintained over increasingly autonomous systems? This question goes beyond simple on-off switches to encompass algorithmic transparency, decision-making accountability, and preserving human agency in critical domains. What constitutes "severe harm" from AI systems, and who determines these thresholds? The International AI Safety Report, which draws on 96 AI experts from 33 countries, is one attempt to establish a shared understanding of AI risks and mitigation strategies.

How can innovation be balanced with safety without stifling beneficial progress? The tension between rapid development and thorough safety testing has become particularly acute as companies race toward artificial general intelligence (AGI). Google DeepMind's recent 145-page safety paper warns that AGI could arrive by 2030 and potentially cause "severe harm" to humanity, and it emphasizes the need for proactive safety measures.

Can global coordination on AI governance be achieved without hindering democratic values? The Global Partnership on Artificial Intelligence, now encompassing 44 countries, represents efforts to foster international cooperation, but challenges remain in aligning diverse political systems and regulatory approaches.

Critical Challenges Requiring Immediate Attention

The Alignment Problem remains perhaps the most pressing technical challenge. As AI systems become more capable, ensuring they pursue intended goals without taking harmful shortcuts becomes increasingly difficult. DeepMind's safety research emphasizes four key risk categories: misuse, misalignment, mistakes, and structural risks. The company has established an AGI Safety Council led by co-founder Shane Legg to address these challenges proactively.

The Speed vs. Safety Dilemma intensifies as competitive pressures drive rapid deployment. The Future of Life Institute's 2025 AI Safety Index evaluates leading AI companies on safety practices, revealing significant gaps in transparency and safety frameworks. Only some companies have published comprehensive safety policies, with many lacking robust whistleblowing protections and third-party evaluations.

Democratic Participation and Inclusion in AI governance face systemic barriers. Research shows that AI development remains concentrated among a small number of companies and countries, raising concerns about whose values are embedded in these systems. The Feminist AI Research Network, highlighted in recent GPAI reports, emphasizes the importance of addressing systemic gender, racial, and intersectional biases in AI technologies.

Economic Disruption and Inequality pose immediate social challenges. While AI promises significant productivity gains, the benefits may not be equally distributed. The risk of mass unemployment, combined with the concentration of AI capabilities among a few actors, could exacerbate existing inequalities and social tensions.

Essential Organizations and Resources for AI Safety

Research and Safety Organizations: The Center for AI Safety (CAIS) focuses specifically on reducing societal-scale risks through safety research and field-building. The Alignment Research Center (ARC), founded by Paul Christiano, develops scalable alignment strategies for future ML systems. FAR.AI serves as a research and education nonprofit facilitating technical breakthroughs in AI safety.

Government and International Initiatives: The UK AI Safety Institute (AISI) conducts research on advanced AI capabilities and risks while working with developers to ensure responsible development. The AI for Good Global Summit, organized by the ITU and 40+ UN agencies, focuses on leveraging AI for the Sustainable Development Goals. The Cloud Security Alliance's AI Safety Initiative provides practical guidance and tools for organizations implementing AI solutions.

Academic and Training Programs: The ML Alignment & Theory Scholars (MATS) Program connects talented scholars with top AI alignment mentors and has produced 116 research publications with over 5,000 citations. The Partnership on AI brings together academic, civil society, and industry organizations to address responsible AI development.

Technical Resources and Frameworks: AWS Responsible AI provides core dimensions including fairness, explainability, privacy, safety, and governance with practical implementation tools. Google's Responsible Generative AI Toolkit offers guidance for designing, building, and evaluating AI models responsibly. IBM's AI Governance framework emphasizes transparency, accountability, and human oversight.

Standards and Policy Organizations: The IEEE Ethically Aligned Design initiative provides comprehensive guidance on building ethical AI systems. The Montreal Declaration for Responsible AI emphasizes collaborative development of responsible AI principles. The European Commission's Ethics Guidelines for Trustworthy AI offers detailed frameworks for ethical AI development.

AI for Impact Opportunities

Create How-to Videos in Seconds with AI

Stop wasting time on repetitive explanations. Guidde’s AI creates stunning video guides in seconds—11x faster.

Turn boring docs into visual masterpieces

Save hours with AI-powered automation

Share or embed your guide anywhere

How it works: Click capture on the browser extension, and Guidde auto-generates step-by-step video guides with visuals, voiceover, and a call to action.

News & Resources

😄 Joke of the Day

Why did the AI become a gardener?

Because it finally learned how to root out problems! 🌱🤖

🌍 News

Big Tech and U.S. politics — A new podcast episode explores how major tech companies, including AI giants, are shaping political discourse and influence under the Trump administration. 🎧 Listen here

AI chatbots in health & therapy — PBS reports on experts’ concerns and guidance for people using AI chatbots for medical or mental health advice. Key takeaway: useful for support, but not a replacement for professional care. 📖 Read more

AI climate predictions — Nature highlights how next-gen AI models are making climate forecasting faster and more precise—potentially improving disaster preparedness and environmental planning. 🌍 Explore here

💼 Jobs

Looking to make an impact? Explore opportunities on Wellfound—a leading platform for startups, including many working with ethical AI, climate tech, and social innovation.

🔗 LinkedIn Connection

Lenny Rachitsky — Former Airbnb growth lead turned product and strategy thought leader. Lenny shares deep insights on product management, growth strategy, and the ethical use of technology. A must-follow for anyone in AI product building. Connect here