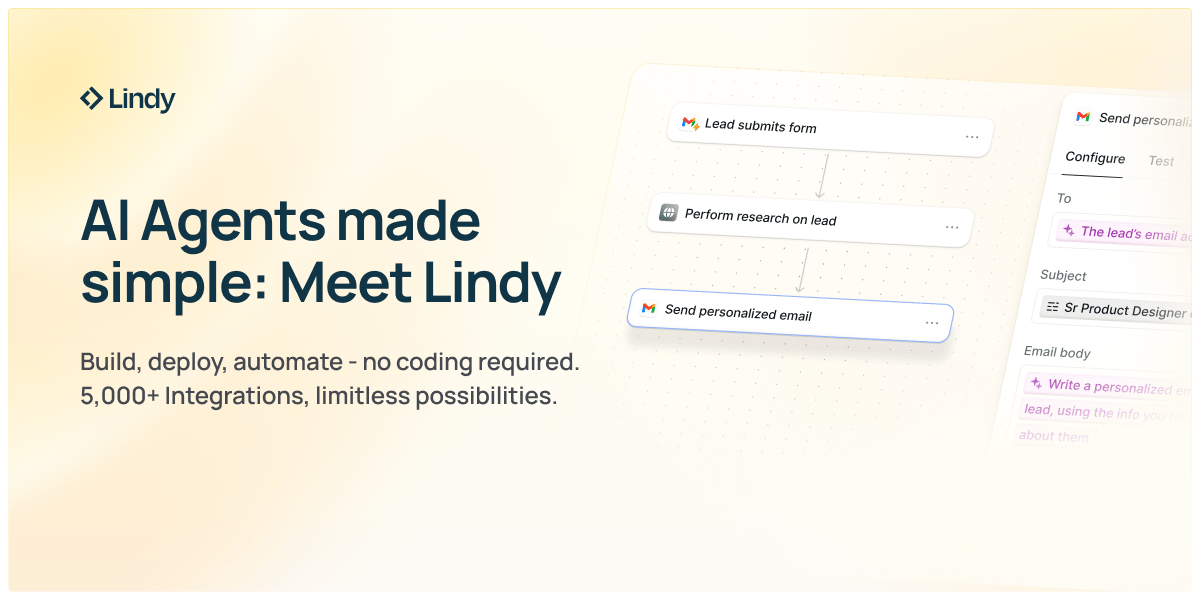

The Simplest Way To Create and Launch AI Agents

Imagine if ChatGPT and Zapier had a baby. It’d be ridiculously smart… and probably named Lindy.

With Lindy, you can spin up AI agents in minutes to handle all the stuff you’d rather not—like lead qual, cold outreach, web scraping, and other “ugh” tasks. It’s like hiring a whole team that never sleeps, never complains, and never asks for PTO.

Lindy’s agents are ready to take on support tickets, data entry, lead enrichment, scheduling, and more. All so you can get back to the fun part: building your business.

Ready to hand off the busy work? Build your first AI agent today and join thousands of businesses already saving time (and sanity) with automation that actually works.

I've been diving deep into the latest AI developments, and after spending considerable time testing what's being touted as "ChatGPT 5", I have some honest observations that I think our changemaker community needs to hear.

The Good, The Bad, and The Overhyped

Don't get me wrong – there are genuinely impressive improvements in the latest ChatGPT iterations. The voice capabilities have taken a significant leap forward, processing speeds have improved dramatically, and the models can handle much more sophisticated prompting techniques. For those of us working on complex social impact projects, the enhanced reasoning capabilities of models like o1 can be genuinely helpful for strategic thinking and problem-solving.

However, here's what OpenAI isn't emphasizing in their marketing blitz: reliability hasn't improved as much as the hype suggests. In fact, some concerning research shows that AI hallucinates more frequently as it gets more advanced, with studies indicating that newer AI models are showing higher hallucination rates than their predecessors. When working on impact-driven projects where accuracy matters, this is a significant problem that can undermine trust and effectiveness. I’ve seen this in my own experimentation to date: lots and lots of hallucinations.

Are AI Launches Becoming the New iPhone Launches?

This brings me to a broader point that's been nagging at me. Are AI model releases following the "iPhone trajectory"? Think about it: the first few iPhone iterations were genuinely revolutionary – introducing touchscreens, the App Store, and fundamentally changing how humans interact with technology. But in recent years, iPhone launches have become exercises in incremental improvements that generate massive buzz without delivering transformative change.

The same pattern seems to be emerging in AI. The jump from GPT-2 to GPT-3, and even to GPT-4, felt genuinely transformative. But now researchers are observing what they call "diminishing marginal returns" – each new iteration requires exponentially more resources for increasingly smaller improvements. The economic principle of diminishing returns that has guided business strategy for decades is catching up with AI development, and the industry is starting to hit the plateau where throwing more compute and data at the problem yields smaller gains.

Of course, huge advancements and even quantum leaps in LLM development are still likely, as are entirely new ways of building AI systems.

Why Perplexity Has Become My Go-To

This is exactly why I've shifted to using Perplexity as my primary AI tool for impact work. The platform offers something that's become increasingly rare: low hallucination rates and transparent sourcing. Recent comprehensive testing shows Perplexity achieving significantly better citation accuracy compared to ChatGPT and other models, with research indicating notably lower error rates compared to other platforms.

What makes Perplexity particularly valuable for our community is its approach to real-time information gathering with proper citations. When researching policy implications, funding opportunities, or impact measurement strategies, having sources you can verify is crucial. Plus, Perplexity Pro gives you access to multiple models (ChatGPT, Claude, Gemini, Grok, and their own Sonar models) in one platform, allowing you to cross-reference responses and choose the best tool for each task.

Perplexity Labs is also amazing. It's the best tool I’ve found for deep, sourced research on all types of content, from curious exploration to blog posts.

LMArena: The Most Fun You'll Have Testing AI Models

Before making decisions about which AI tools to integrate into your impact work, I highly recommend spending some quality time with LMArena. This platform is absolutely brilliant.

The concept is deceptively simple but incredibly powerful. You submit a prompt, and two anonymous AI models respond. You don't know which models you're testing – could be ChatGPT vs. Claude, or Gemini vs. some open-source model you've never heard of (in total, the platform has more than 120 models). You read both responses, vote for the better one, and only then does the system reveal which models you were comparing. It's like the ultimate blind taste test for AI.

But here's where it gets really interesting: LMArena uses the same Elo rating system as chess tournaments to rank models based on millions of user votes. When a lower-rated model beats a higher-rated one, it gains more points than if the roles were reversed. The mathematics behind it are elegant, and the real-time leaderboard updates make it genuinely exciting to watch model rankings shift as new votes come in.
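
For the curious, here is a minimal sketch of how a chess-style Elo update works after a single vote. The function names, the K-factor of 32, and the 400-point scale are textbook chess conventions assumed for illustration. LMArena's production ranking may use different constants or a different statistical fit altogether.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return the new (rating_a, rating_b) after one head-to-head vote.

    k is an assumed K-factor: it caps how many points one vote can move.
    """
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Whatever A gains, B loses, so the total number of points is conserved.
    return rating_a + delta, rating_b - delta


# Hypothetical ratings: an underdog at 1000 facing a favorite at 1200.
underdog_after_upset, _ = elo_update(1000, 1200, a_won=True)
favorite_after_win, _ = elo_update(1200, 1000, a_won=True)

upset_gain = underdog_after_upset - 1000      # points gained by the underdog
routine_gain = favorite_after_win - 1200      # points gained by the favorite
print(f"Upset win: +{upset_gain:.1f}, expected win: +{routine_gain:.1f}")
```

With these made-up ratings, the underdog's upset is worth roughly three times as many points as the favorite's routine win, which is the asymmetry described above.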

The platform has become so influential that it recently raised $100 million in seed funding led by Andreessen Horowitz, with over 1 million monthly visitors participating in model comparisons. The insights from real user voting often reveal performance differences that don't show up in traditional benchmarks.

What makes LMArena particularly fascinating is how it's exposed some uncomfortable truths about the AI industry. Research shows that major companies like Meta tested 27 versions of Llama-4 privately before publishing only their best-performing version, while Google reportedly tested 10 variants of Gemini. This "bench-maxing" practice creates performance illusions on public leaderboards.
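
To see why publishing only the best of many private variants inflates a score, here is a rough simulation. It assumes, as a deliberate simplification, that every variant is equally good and that each measured score is just the true score plus random noise. The true score of 1200, the noise level of 20 points, and the function names are all hypothetical.

```python
import random


def best_of_n(true_score: float, noise_sd: float, n: int, rng: random.Random) -> float:
    """Measure n equally good variants with noise and report only the top one."""
    return max(rng.gauss(true_score, noise_sd) for _ in range(n))


def average_published_score(n_variants: int, trials: int = 2000,
                            true_score: float = 1200.0,
                            noise_sd: float = 20.0, seed: int = 0) -> float:
    """Average published score if a lab always publishes its best of n_variants."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    total = sum(best_of_n(true_score, noise_sd, n_variants, rng)
                for _ in range(trials))
    return total / trials


single = average_published_score(1)    # publish the only variant tested
cherry = average_published_score(27)   # publish the best of 27 variants
print(f"One variant: {single:.0f}, best of 27: {cherry:.0f}")
```

Even though no variant is actually better than another in this toy setup, the best-of-27 score lands well above the true value, purely from picking the luckiest measurement.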

The platform even has specialized arenas now – there's a WebDev Arena where you can test AI models' coding abilities by having them build websites and web applications. Users have tested everything from minesweeper games to social media clones, and the results are both hilarious and revealing.

I’ve shown the platform in some of the courses and workshops I teach, and almost everyone has a lot of fun trying it out.

The Bottom Line for Changemakers

As impact-driven professionals, being thoughtful about AI adoption is crucial. The hype cycles and marketing push from major tech companies shouldn't drive decisions – effectiveness and reliability should. While the latest ChatGPT improvements are real, they're not the revolutionary leap being promised. Meanwhile, platforms like Perplexity offer more reliable, citation-backed responses that are better suited for the kind of rigorous, evidence-based work our community demands.

Take Action:

Try Perplexity Pro with our affiliate link for a free month: https://perplexity.ai/pro?referral_code=DKKDN6PD (if you sign up, our team also gets a free extra month).

Spend an afternoon testing models at https://lmarena.ai/ – trust me, it's way more fun than it sounds, and you'll gain genuine insights about which models work best for your specific use cases.

What AI tools are working best for your impact projects? Hit reply and let me know your experiences, or fill out the super quick form below and add your tools.

Share your feedback on the AI for Impact Newsletter

AI for Impact Opportunities

Choose the Right AI Tools

With thousands of AI tools available, how do you know which ones are worth your money? Subscribe to Mindstream and get our expert guide comparing 40+ popular AI tools. Discover which free options rival paid versions and when upgrading is essential. Stop overspending on tools you don't need and find the perfect AI stack for your workflow.

News & Resources

😄 Joke of the Day

Why did the judge bring a neural network to court?

Because they wanted sentencing with context!

🌍 News Highlights

Judiciary and AI: Judges are experimenting with AI assistance in courtroom settings—balancing potential gains in consistency with concerns around transparency, bias, and public trust.

AI Race in India: Perplexity AI and Google are offering free premium AI search services in India, with Perplexity partnering with Airtel to reach its 360 million subscribers and Google offering Gemini access for students.

A Kentucky county launched an AI tool called Sensemaker to gather and analyze residents’ thoughts in their own words—beyond traditional town hall formats. The initiative, part of Judy Woodruff’s “America at a Crossroads” series, revealed more common ground among community members than expected, demonstrating how AI can enhance civic engagement in a thoughtful, inclusive way.

Watch the full segment: How a Kentucky community is using AI to help people find common ground (PBS NewsHour)

🎓 Career Resource

UNU Jobs – Professional opportunities at the United Nations University for those passionate about leveraging AI for sustainable development, policy, and global impact.

🔗 LinkedIn Connection

Seungah Jeong – Dynamic, innovative, purpose-driven leader at the intersection of AI, ethics, and equity, with expertise in machine learning governance and inclusive design.